Linux UpSkill Challenge Days 10 through 20 (End) - Cron, SFTP, logrotate, and final thoughts on the lessons

I ended up with an unexpected day off work yesterday, and since that freed up some time from coursework, I decided to finish the rest of the days of the UpSkill challenge.

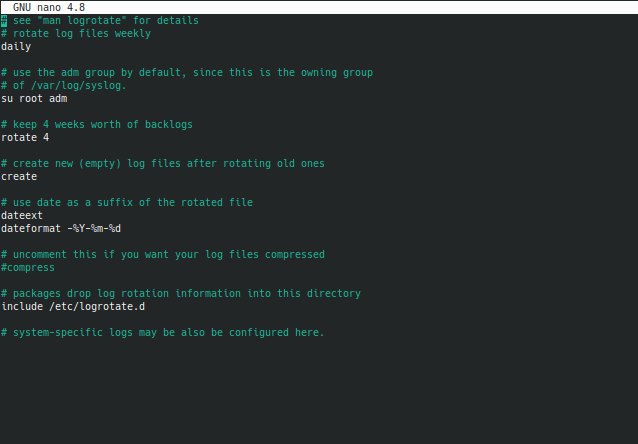

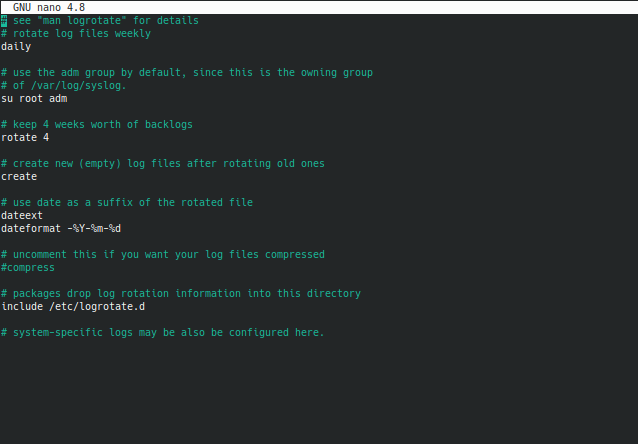

To wrap up the focus on logs that I've kept throughout this course, Day 18 covered log rotation. In /etc/logrotate.conf you can see the default values for rotating system logs. These defaults apply globally, but you can also set rules for individual logs. Some logs I might only care to check once a week, but for things like auth.log or the nginx/apache access and error logs I would want daily snapshots. On this test server I didn't get that detailed, so my logrotate.conf looks like the image below.

I changed the first uncommented line from weekly to daily, uncommented dateext, and added a dateformat line below it. You can format the date however you wish; what I put in dateformat is actually the default value anyway, so set it to whatever is easiest for you to read. You can also see the last commented line, about system-specific logs. Let's say I only wanted to keep one week's worth of auth.log files to reduce clutter. I could add a specific configuration like this:

```
/var/log/auth.log {
    rotate 7
    mail example@example.com
}
```

The first line means that once there are 7 rotated log files, logrotate simply deletes the oldest one instead of rotating it down further. This alone reduces clutter, but it isn't exactly best practice, since older logs can sometimes be important. With the mail line added (assuming a mail service is set up on this server), any old log that is about to be purged is mailed to that address instead of just disappearing. This lets us reduce clutter in the directory while still keeping a record of these events on a separate storage system. Even with everything moving to the cloud, keeping some data stored locally or with a different service for redundancy is very important.
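Before dropping a rule like this into the system config, it can be tried out in logrotate's debug mode. The sketch below is a minimal example that assumes logrotate is installed: it writes the same directives against a throwaway log in a temporary directory (the sandbox and demo.log are hypothetical stand-ins, not part of my server setup) and asks logrotate to print what it would do without rotating or mailing anything.

```shell
#!/bin/sh
# Dry-run an auth.log-style rule against a throwaway log so nothing in
# /etc or /var/log is touched. The sandbox and demo.log are hypothetical.
set -u

sandbox=$(mktemp -d)
touch "$sandbox/demo.log"

# Standalone config: the global settings (daily, dateext, dateformat) are
# repeated here because this file does not include /etc/logrotate.conf.
cat > "$sandbox/demo.conf" <<EOF
$sandbox/demo.log {
    daily
    rotate 7
    dateext
    dateformat -%Y%m%d
    mail example@example.com
}
EOF

# -d: debug mode, report what would happen but change nothing.
# --state: use a private state file instead of the system-wide one.
if command -v logrotate >/dev/null 2>&1; then
    logrotate -d --state "$sandbox/state" "$sandbox/demo.conf" \
        || echo "logrotate reported a problem with the config" >&2
else
    echo "logrotate not installed; config left in $sandbox/demo.conf"
fi
```

The sandbox is left in place so the generated config can be inspected afterwards. One thing worth checking on a real Ubuntu server: auth.log is usually already listed in /etc/logrotate.d/rsyslog, and logrotate complains about duplicate entries for the same file, so adjusting that existing rule may be cleaner than adding a second one.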

Cron jobs were the other major takeaway from the last few lessons. Most good sysadmins are also lazy sysadmins: they automate repetitive tasks across many different servers and VMs, and cron jobs are how they do it. You write a script, and cron runs it on a schedule. I wouldn't need this for the log management example I just talked about, but what if I want a list of recently disconnected SSH attempts on my server generated for me every day? I wrote a bash script in my last post to get that data and put it in my PATH, so a cron job can simply run it for me once a day.
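Here is a rough sketch of scheduling that. It assumes the script from the last post lives at ~/bin/ssh-attempts.sh and prints its report to stdout; that file name and the ~/attackers.txt output are hypothetical placeholders for whatever you actually use. By default it only prints the crontab line; passing --install appends it to your crontab (you could also paste the line in by hand with crontab -e).

```shell
#!/bin/sh
# Build (and optionally install) a daily cron entry for the SSH report script.
# ASSUMPTION: ~/bin/ssh-attempts.sh and ~/attackers.txt are hypothetical names.
set -eu

script="$HOME/bin/ssh-attempts.sh"   # hypothetical path; use the real one
report="$HOME/attackers.txt"         # hypothetical output file

# Fields: minute hour day-of-month month day-of-week command
# "0 6 * * *" = every day at 06:00. Absolute paths are used because cron
# runs jobs with a minimal PATH, not your login shell's PATH.
entry="0 6 * * * $script > $report 2>&1"

if [ "${1:-}" = "--install" ]; then
    # crontab -l exits non-zero when no crontab exists yet; treat that as empty.
    current=$(crontab -l 2>/dev/null || true)
    if printf '%s\n' "$current" | grep -qF "$script"; then
        echo "already scheduled"
    else
        printf '%s\n%s\n' "$current" "$entry" | crontab -
        echo "installed"
    fi
fi

printf '%s\n' "$entry"
```

Reading the existing crontab first and appending to it avoids wiping out other jobs, and the grep check keeps a second --install from adding a duplicate line.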

SFTP stands for SSH File Transfer Protocol, and it's built into OpenSSH for sending and receiving files over an SSH connection. So if I want to grab files off my server manually rather than through automation, sftp is the way to go. If you've already set up your SSH connection in your config file as I did, connecting to the server is as easy as typing

```
sftp linuxskillup
```

and you're in the sftp shell, ready to copy whatever you want. The full manual page for sftp can be seen here, but the basic commands are get to receive files from the remote host and put to send files to it. So if I want to copy attackers.txt from this remote server to analyze it locally, I can type this in the sftp shell:

```
get /home/cleverness/attackers.txt /home/cleverness/Downloads
```

The first path is on the remote server, and the second is on my local machine. If you're already in the file's directory on the remote server, the full path isn't necessary: attackers.txt alone would have worked, since I was already in my home directory there. In reverse, if I want to send the file back after examining it and adding the date to its name, in the sftp shell I'd type

```
put /home/cleverness/Downloads/attackers-09222020.txt /home/cleverness
```

Local path first, then the remote path. It's a nice way of securely transferring files between machines.
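The same get can also be run without opening the interactive shell, using sftp's batch mode (-b), which reads one sftp command per line from a file. This is a sketch under a few assumptions: linuxskillup is the Host alias from ~/.ssh/config, key-based login is set up (batch mode can't answer a password prompt), and the paths are the ones from this post. The date is added to the local file name in the same MMDDYYYY style as attackers-09222020.txt. By default it only prints the batch file; pass --run to actually transfer.

```shell
#!/bin/sh
# Non-interactive version of the interactive "get" using sftp batch mode.
# ASSUMPTIONS: "linuxskillup" is an ~/.ssh/config Host alias with key-based
# auth; the remote and local paths are the ones used in this post.
set -eu

host="linuxskillup"
stamp=$(date +%m%d%Y)    # e.g. 09222020
remote="/home/cleverness/attackers.txt"
local_copy="/home/cleverness/Downloads/attackers-$stamp.txt"

# One command per line, exactly as you would type it in the sftp shell.
batch=$(mktemp)
printf 'get %s %s\n' "$remote" "$local_copy" > "$batch"

if [ "${1:-}" = "--run" ]; then
    sftp -b "$batch" "$host"
else
    echo "Dry run. Batch file contents:"
    cat "$batch"
fi
```

In batch mode sftp aborts on the first get or put that fails, which is usually what you want in a script. A put line works the same way for sending files back.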

Overall I had a lot of fun with this challenge. Some days were more informative than others, since I already knew some of this from running Linux servers at home, but I still learned a lot. Big thanks to snori74 on GitHub/Reddit for hosting the challenge. The pages I covered in this post are at the bottom, but here's the full GitHub page again for anyone interested in starting fresh.

Day 10 Day 11 Day 12 Day 13 Day 14 Day 15 Day 16 Day 17 Day 18 Day 19 Day 20