Linux UpSkill Challenge Days 7 through 9 - Apache, Grep, and Firewalls

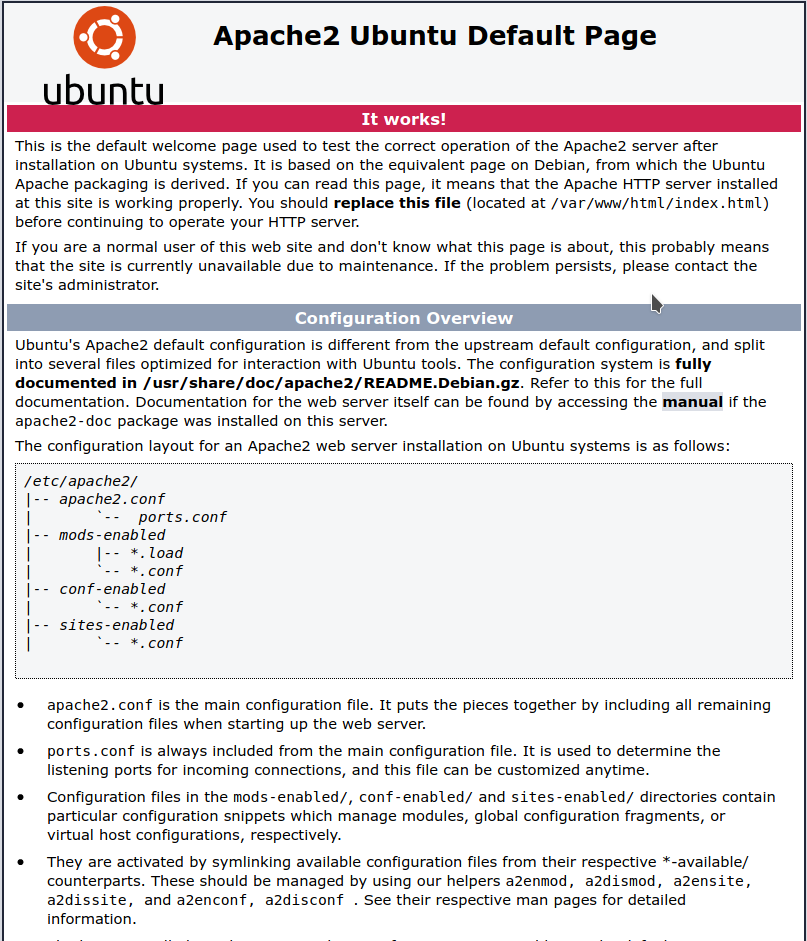

Day 7 involved installing Apache2 on my Ubuntu server, and while troubleshooting it I inadvertently ended up doing parts of Day 9 before I figured out my error. Installing Apache lets you serve a webpage from your server over port 80 and/or 443, depending on whether you have SSL set up. On Ubuntu 20.04 it's supposed to be a pretty simple install to get the sample page showing on your IP/domain, but I overlooked something, as this was my first time hosting a cloud server.

My first step was to make sure the ports were open to the internet, which really should have been my first clue as to the real solution. Checking the firewall with

sudo ufw status

showed that the firewall was actually inactive by default on this installation. Once it is active, the output shows the status along with each entry in the list and whether it is allowed, as seen here

cleverness@Linux-Skillup-Challenge-Ubuntu:~$ sudo ufw status

Status: active

To Action From

-- ------ ----

OpenSSH ALLOW Anywhere

Apache ALLOW Anywhere

OpenSSH (v6) ALLOW Anywhere (v6)

Apache (v6) ALLOW Anywhere (v6)

So in my infinite wisdom, I thought "maybe if the firewall is on, connections will work through port 80". That is not how firewalls work at all: an inactive firewall blocks nothing, and I was already connected remotely through SSH, but I'm not smart sometimes. So I went about finding out the best way to add everything to the firewall and turn it on without accidentally locking myself out, which is apparently an easy thing to do. Instead of adding in the ports manually, which is completely viable, I ended up using the 'app list' command

cleverness@Linux-Skillup-Challenge-Ubuntu:~$ sudo ufw app list

Available applications:

Apache

Apache Full

Apache Secure

OpenSSH

Here we see the available application profiles that can be added to the firewall based on what is installed on this system. As it's very barebones, there are literally only 2 applications behind them: Apache (with three profiles) and OpenSSH. OpenSSH is just 1 port and the obvious one since I am connected remotely, so I added that first

cleverness@Linux-Skillup-Challenge-Ubuntu:~$ sudo ufw allow OpenSSH

Then when it comes to Apache, it depends on what I am going to be doing with it. If I want to use it as a reverse proxy I will probably want to go with Apache Full to allow connections on both port 80 and port 443, or Apache Secure if I only want to allow traffic through 443. As this is a temporary instance, and the most I'll be doing before it disappears is trying out some web templates, I just selected Apache, which opens port 80 only.

cleverness@Linux-Skillup-Challenge-Ubuntu:~$ sudo ufw allow Apache

Then I enabled the firewall and hoped I didn't mess anything up, because if I did I was about to get disconnected from my SSH session.

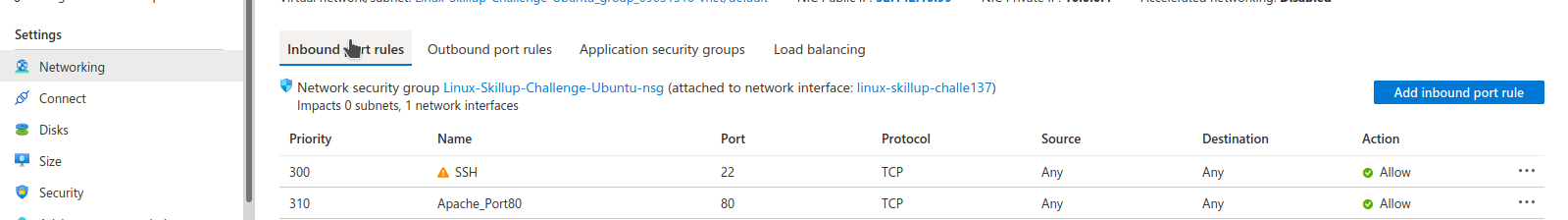

cleverness@Linux-Skillup-Challenge-Ubuntu:~$ sudo ufw enable
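The lock-out risk comes down to ordering: the SSH rule has to exist before the firewall is switched on. Here is a minimal sketch of a guard for that, assuming `sudo ufw show added` prints each added rule as a line such as `ufw allow OpenSSH`. The helper names `has_ssh_rule` and `safe_enable` are made up for illustration.

```shell
#!/usr/bin/env bash

# has_ssh_rule: reads a rule listing on stdin and succeeds only if
# some line allows the OpenSSH profile or port 22.
has_ssh_rule() {
    grep -Eq '^ufw allow (OpenSSH|22(/tcp)?)[[:space:]]*$'
}

# safe_enable (hypothetical helper): enable ufw only when an SSH rule exists.
# Assumption: `ufw show added` lists rules even while the firewall is inactive.
safe_enable() {
    if sudo ufw show added | has_ssh_rule; then
        sudo ufw enable
    else
        echo "No SSH rule found; run 'sudo ufw allow OpenSSH' first." >&2
        return 1
    fi
}
```

Running `safe_enable` instead of `sudo ufw enable` directly would refuse to turn the firewall on until the OpenSSH rule has been added.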

I wasn't disconnected, so it worked, and the firewall status now shows active, as in the output I displayed earlier. But the Apache default page still wasn't loading. After some Google-fu and asking on Discord, it dawned on me that I never allowed traffic through port 80 on Azure itself. Similar to port forwarding on your home router, if you don't open the ports for the VM on Azure, traffic won't be allowed through to it, for security reasons. The rule for port 22 was created when I first spun the VM up, as I requested it, but it slipped my mind right afterwards that I might need to do this again for other ports.

Literally right after I added the inbound rule, the default page worked! So that solved that dilemma for me.
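For reference, an inbound rule like this can also be added from the Azure CLI with `az vm open-port`. This is a minimal sketch, assuming the CLI is installed and logged in. The wrapper name `open_web_port` and the resource group and VM names in the usage line are placeholders rather than real values.

```shell
#!/usr/bin/env bash

# open_web_port (hypothetical wrapper): add an inbound rule for one port.
# $1 = resource group, $2 = VM name, $3 = port (defaults to 80)
open_web_port() {
    az vm open-port --resource-group "$1" --name "$2" --port "${3:-80}"
}

# Usage (placeholder names):
#   open_web_port my-resource-group my-vm 80
```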

Day 8 dealt with using different commands for log reading and management. One of my 2 big takeaways was that tail -f /path/to/log allows for real-time log reading. So for example, if I want to see real-time logs for this website on my NGINX VM, I would run

tail -f /var/log/nginx/cleverness.tech.access.log

The terminal window will then keep running, showing me when pages are being accessed. As this site doesn't get a ton of traffic, it's very easy to follow. But what if many attempts per minute are being made to access your server, whether it is remote or local? It's still possible to do this, but being able to break it down and output it somewhere is very helpful as a System Administrator. That's where grep, the second big takeaway from Day 8, came in.

grep allows you to search and filter words and phrases from files, but it can also be used to further narrow down a previous grep search. So if after examining logs I see many failed attempts at logging in with different usernames, I can narrow it down with something like

grep "authenticating" /var/log/auth.log| grep -v "root"

It'll first filter the lines in auth.log down to those with authenticating in them, and then, because of the -v flag, further filter them down to the ones that do not include root. The Day 8 lesson then goes into how to format the output using the cut command, and how to redirect that output to a text file for future reading and analysis.

grep "authenticating" /var/log/auth.log| grep -v "root"| cut -f 10- -d" " > attackers.txt

Depending on the log you are viewing and the line layout, you may have to adjust the values a bit, but it will leave you with just the part of each line that holds the IP address, which you can use for future analysis. And since auth.log logs many different connection types with different messages, you can just change the grep inputs and, once you get the output you desire, append it to the end of attackers.txt with >> instead of > to build up one large file.

Instead of cut there is another program called awk, which is more difficult to use but gives you more control over what you pull out of a file if you know where it is. I found a nice example on this GitHub page
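To make the grep, grep -v, cut, and > versus >> steps concrete without touching a real log, here is a small sketch that runs them against three made-up lines written in the style of auth.log. The host name, users, and IPs (taken from the documentation ranges) are invented, and the field numbers only hold for this exact layout.

```shell
#!/usr/bin/env bash

# Made-up sample lines; a real auth.log will differ.
sample=$(mktemp)
cat > "$sample" <<'EOF'
Jan 10 10:00:01 host sshd[101]: Disconnected from authenticating user root 203.0.113.5 port 4000 [preauth]
Jan 10 10:00:02 host sshd[102]: Disconnected from authenticating user admin 198.51.100.7 port 4001 [preauth]
Jan 10 10:00:03 host sshd[103]: Accepted publickey for cleverness from 192.0.2.10 port 4002 ssh2
EOF

out=$(mktemp)

# Keep "authenticating" lines, drop the ones mentioning root,
# then keep everything from the 10th space-separated field onward.
# The single > creates (or overwrites) the output file.
grep "authenticating" "$sample" | grep -v "root" | cut -f 10- -d" " > "$out"

# Same filter, but only field 11 (the IP in this layout).
# The double >> appends to the file instead of overwriting it.
grep "authenticating" "$sample" | grep -v "root" | cut -f 11 -d" " >> "$out"

cat "$out"
```

With this layout, -f 10- starts at the username, so the file ends up holding `admin 198.51.100.7 port 4001 [preauth]` followed by `198.51.100.7`. Keep in mind that cut counts every single space as a delimiter, so a timestamp with a single-digit day padded by two spaces would shift each field by one.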

grep -rhi 'invalid' /var/log/auth.log* | awk '{print $10}' | uniq | sort > ~/ips.txt

This grabs only the lines containing invalid, pulls the IP from the 10th field, passes the results through uniq to cut down on repeats, sorts them, and redirects the output to ips.txt.

I tried looking into using an alias for grep, but aliases are not really friendly when it comes to accepting multiple inputs like I would want for this situation, so I ended up learning a bit about bash functions.
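One detail in that pipeline worth checking is the order of uniq and sort. uniq only removes duplicate lines that sit next to each other, so running it before sort can leave repeats behind. A quick sketch with three made-up IPs shows the difference:

```shell
#!/usr/bin/env bash

# Made-up input: the same IP appears twice, but not on adjacent lines.
ips=$(printf '%s\n' 203.0.113.5 198.51.100.7 203.0.113.5)

# uniq first: nothing is adjacent yet, so all three lines survive the sort.
before=$(printf '%s\n' "$ips" | uniq | sort)

# sort first: duplicates become adjacent and uniq can drop them.
after=$(printf '%s\n' "$ips" | sort | uniq)

# sort -u does the same in one step.
short=$(printf '%s\n' "$ips" | sort -u)

printf 'uniq | sort:\n%s\n\nsort | uniq:\n%s\n' "$before" "$after"
```

So if repeats show up in ips.txt, swapping the order to sort | uniq (or using sort -u) should clear them out.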

Bash functions are custom functions you can write that can be executed any time, and in my case I can also pass through some values into them if I write them that way. First I need to open the file I need to edit for all this to work like so

nano ~/.bashrc

At the very bottom of the bash file is where I am going to add in my function.

authscan() {

grep -rhi "$1" /var/log/auth.log* | awk '{print $10}' | uniq | sort

}

If you look at the line inside authscan() you can see it's very similar to the last grep line, with one small difference: the "$1" is where the value I pass in when running the function ends up. As I only need 1 grep argument, I only pass in 1, but if I wanted to scan different logs I could add a "$2" where the log file path is defined. However, if doing so, you might need to adjust the awk part when calling the function, as it's very precise about which field it prints. For example, my Apache and NGINX logs start with the IP address right at the beginning of each line, while auth.log will have it somewhere else depending on the type of connection occurring.
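As a sketch of the "$2" idea, a variation on authscan could take the log path and the awk field as optional arguments. The name `logscan`, the argument order, and the defaults are all assumptions for illustration, and it uses sort -u in place of uniq | sort.

```shell
#!/usr/bin/env bash

# logscan (hypothetical): $1 = search text, $2 = log path (default auth.log),
# $3 = field number for awk to print (default 10).
logscan() {
    local pattern=$1
    local log=${2:-/var/log/auth.log}
    local field=${3:-10}
    # The trailing * also picks up rotated copies such as auth.log.1
    grep -hi -- "$pattern" "$log"* | awk -v f="$field" '{print $f}' | sort -u
}

# Usage:
#   logscan 'invalid'
#   logscan 'GET' /var/log/nginx/cleverness.tech.access.log 1
```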

After saving and exiting, run the following to reload bash without disconnecting you from your environment

exec bash

Now to test it, I simply run the following to make sure I get the same output as the full grep command from earlier.

authscan 'invalid' > ips2.txt

And I end up with the same text file. So if I don't feel like hitting the up arrow 10-40 times to look for the grep command, I can use bash functions on my actual servers to get the same result. The grep code I found isn't 100% perfect at filtering out everything that isn't an IP, due to how wildly different the sentence structure is in auth.log, but in the apache2 access log it actually works flawlessly, since the first field on each line in those logs is the connecting IP. So for my NGINX VM, by swapping the 10 for 1 in the print portion, I get a much cleaner list of IPs in my output.
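Since the position of the IP shifts from one auth.log message to the next, another option is to match on the shape of an IPv4 address instead of a field number. This is a rough sketch, assuming a grep that supports -o and -E. The function name `extract_ips` is made up, the pattern also accepts impossible values such as 999.1.1.1, and it ignores IPv6 entirely.

```shell
#!/usr/bin/env bash

# extract_ips (hypothetical): print each IPv4-looking string from stdin once, sorted.
extract_ips() {
    grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | sort -u
}

# Usage (reading the real log needs appropriate permissions):
#   grep -hi 'invalid' /var/log/auth.log* | extract_ips > ~/ips.txt
```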